Comparison of Feature Selection Algorithms

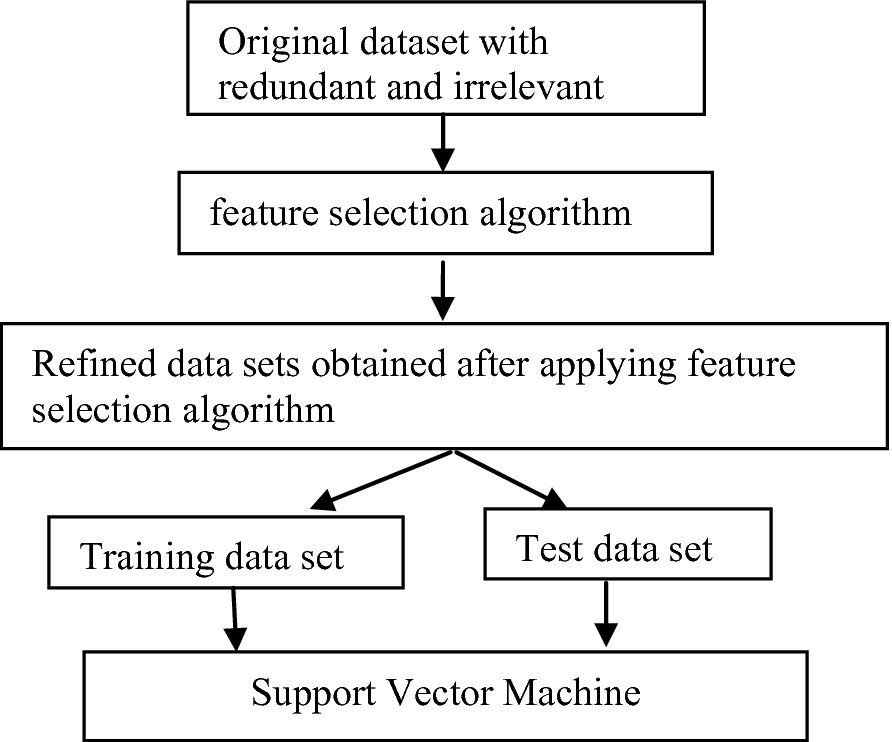

Feature selection reduces the dimension of the input space by selecting a relevant subset of the input features.

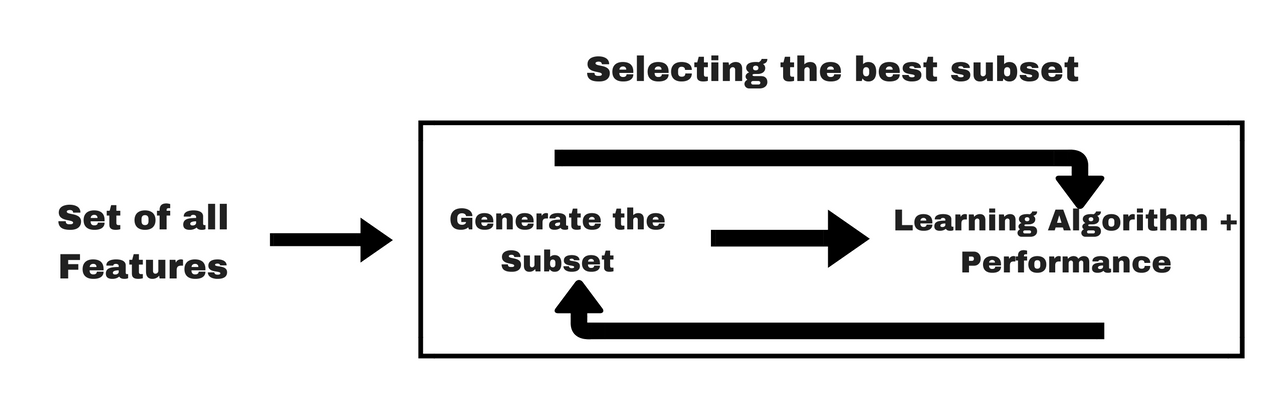

Feature selection in machine learning refers to the process of choosing the most relevant features in our data to give to our model. Wrapper methods treat the selection of a set of features as a search problem. Embedded methods use algorithms that have built-in feature selection.
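To make the "search problem" view of wrapper methods concrete, here is a minimal sketch of greedy sequential forward selection. The function name, the `USEFULNESS` values, and `toy_score` are illustrative assumptions, not part of any library; in a real wrapper the score would come from training and validating a model on each candidate subset.

```python
def sequential_forward_selection(n_features, score, k):
    """Greedy wrapper search: grow the subset one feature at a time.

    `score` is any callable mapping a list of feature indices to a number
    (higher is better); in practice it would train and validate a model.
    """
    selected = []
    remaining = list(range(n_features))
    while remaining and len(selected) < k:
        # Try each unused feature and keep the one giving the best subset score.
        best = max(remaining, key=lambda j: score(selected + [j]))
        selected.append(best)
        remaining.remove(best)
    return selected


# Hypothetical stand-in for cross-validated accuracy: each feature has a
# made-up usefulness, and features 0 and 1 are treated as redundant.
USEFULNESS = [0.5, 0.45, 0.3, 0.05]


def toy_score(subset):
    total = sum(USEFULNESS[j] for j in subset)
    if 0 in subset and 1 in subset:
        total -= 0.4  # penalty for selecting two redundant features
    return total


chosen = sequential_forward_selection(4, toy_score, k=2)
print(chosen)
```

Because the score is evaluated on whole subsets, the search can skip a feature that looks strong on its own but adds little once a redundant partner is already selected. A filter method that ranks features one at a time cannot do this.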

This is where feature selection comes in. Feature selection evaluation aims to gauge the efficacy of a feature selection (FS) algorithm. Experimental comparisons of feature selection and machine learning algorithms in multi-class sentiment classification.

Feature selection (FS) is extensively studied in machine learning. In evaluation, it is often necessary to compare a newly proposed feature selection algorithm with existing ones. We often need to compare two FS algorithms, A1 and A2. Without knowing the true relevant features, a conventional way of evaluating A1 and A2 is to measure the effect of their selected features on classification accuracy in two steps.
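One plausible reading of this two-step evaluation is sketched below: first each algorithm selects features on the training data, then the same classifier is trained on each selection and scored on held-out data. Everything here is an assumption made for illustration: the two selectors standing in for A1 and A2, the nearest-centroid classifier, and the tiny hand-made dataset.

```python
from statistics import mean, pvariance


def select_by_variance(X, y, k):
    """Hypothetical A1: keep the k features with the largest variance."""
    n = len(X[0])
    scores = [pvariance([row[j] for row in X]) for j in range(n)]
    return sorted(range(n), key=lambda j: -scores[j])[:k]


def select_by_class_gap(X, y, k):
    """Hypothetical A2: keep the k features whose class means differ most."""
    def gap(j):
        pos = [row[j] for row, label in zip(X, y) if label == 1]
        neg = [row[j] for row, label in zip(X, y) if label == 0]
        return abs(mean(pos) - mean(neg))
    return sorted(range(len(X[0])), key=lambda j: -gap(j))[:k]


def nearest_centroid_accuracy(X_tr, y_tr, X_te, y_te, features):
    """Train a nearest-centroid classifier on the chosen columns and score it."""
    centroids = {}
    for label in set(y_tr):
        rows = [[r[j] for j in features] for r, l in zip(X_tr, y_tr) if l == label]
        centroids[label] = [mean(col) for col in zip(*rows)]
    correct = 0
    for row, label in zip(X_te, y_te):
        point = [row[j] for j in features]
        predicted = min(
            centroids,
            key=lambda c: sum((p - q) ** 2 for p, q in zip(point, centroids[c])),
        )
        correct += predicted == label
    return correct / len(y_te)


def compare(selectors, X_tr, y_tr, X_te, y_te, k):
    results = {}
    for name, select in selectors.items():
        chosen = select(X_tr, y_tr, k)  # step 1: select on training data only
        # step 2: same classifier, same split, only the feature subset differs
        results[name] = nearest_centroid_accuracy(X_tr, y_tr, X_te, y_te, chosen)
    return results


# Toy data: column 0 tracks the label, column 1 is high-variance noise.
X_train = [[0.0, 5.0], [0.1, -5.0], [0.2, 4.0], [0.1, -4.0],
           [1.0, 5.0], [0.9, -5.0], [1.1, 4.0], [1.0, -2.0]]
y_train = [0, 0, 0, 0, 1, 1, 1, 1]
X_test = [[0.05, 4.5], [0.15, -4.5], [0.95, -4.5], [1.05, 4.5]]
y_test = [0, 0, 1, 1]

results = compare({"A1": select_by_variance, "A2": select_by_class_gap},
                  X_train, y_train, X_test, y_test, k=1)
print(results)
```

Holding the classifier and the train/test split fixed means any difference in accuracy can be attributed to the selected features. On real data, a single split is noisy, so repeated or cross-validated splits would give a more reliable comparison.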

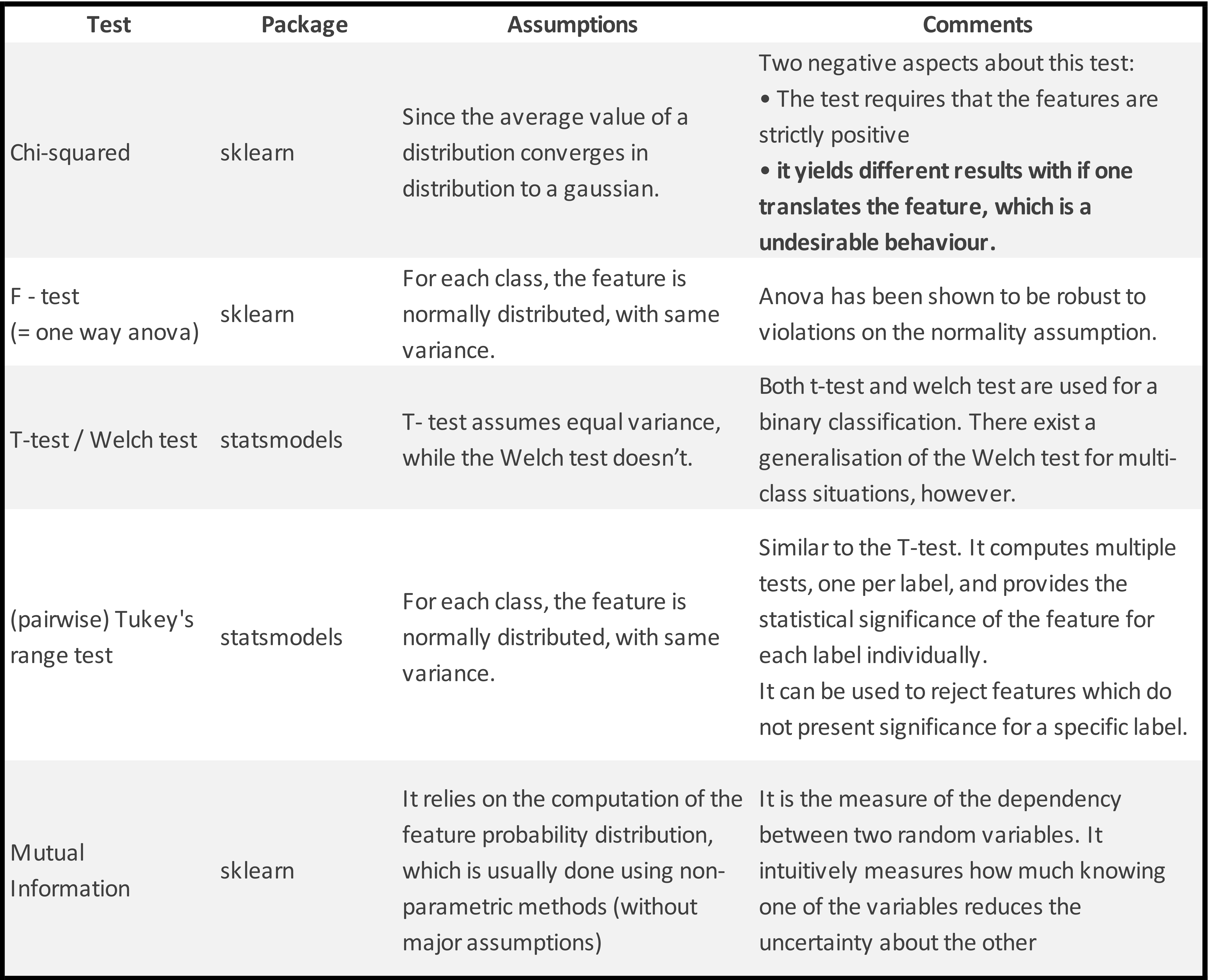

Many published algorithms are also implemented in machine learning tools such as R and Python. What would be an appropriate method to compare different feature selection algorithms and to select the best one for a given problem or dataset? The performance and speed of three classifier-specific feature selection algorithms are compared for large-scale problems: the sequential forward (backward) floating search (SFFS/SBFS) algorithm, its adaptive version (ASFFS/ASBFS), and the genetic algorithm (GA).

Feature selection is an important but often forgotten step in the machine learning pipeline. By limiting the number of features we use, rather than just feeding the model the unmodified data, we can often speed up training, improve accuracy, or both. My approach to determining the best set of features with random forest (RF) is to ...

Feature importance in a random forest is determined by observing how often a given feature is used and its net impact on discrimination, as measured by Gini impurity or entropy. Evaluating an FS algorithm boils down to evaluating its selected features, and this evaluation is an integral part of FS research.
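The impurity-based notion of random forest importance mentioned above can be illustrated with a small sketch. It computes the Gini impurity of a node, the impurity decrease of a split, and a normalised per-feature total over the splits of a single hand-written tree. The split list and the feature names `x1` and `x2` are made up for illustration, and a real forest would average these totals over many trees.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a node: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def impurity_decrease(parent, left, right):
    """Parent impurity minus the size-weighted impurity of the two children."""
    n = len(parent)
    return (gini(parent)
            - (len(left) / n) * gini(left)
            - (len(right) / n) * gini(right))


def feature_importances(splits, n_total):
    """Sum each feature's impurity decreases, weighted by node size, then normalise.

    `splits` is a list of (feature, parent_labels, left_labels, right_labels).
    """
    totals = {}
    for feature, parent, left, right in splits:
        weight = len(parent) / n_total  # fraction of samples reaching the node
        gain = weight * impurity_decrease(parent, left, right)
        totals[feature] = totals.get(feature, 0.0) + gain
    norm = sum(totals.values())
    if norm == 0:
        return totals  # no split reduced impurity; nothing to normalise
    return {feature: value / norm for feature, value in totals.items()}


# Hypothetical tree over 8 samples: the root splits on x1, both children on x2.
splits = [
    ("x1", [0, 0, 0, 0, 1, 1, 1, 1], [0, 0, 0, 1], [0, 1, 1, 1]),
    ("x2", [0, 0, 0, 1], [0, 0, 0], [1]),
    ("x2", [0, 1, 1, 1], [0], [1, 1, 1]),
]
importances = feature_importances(splits, n_total=8)
print(importances)
```

A feature gains importance both by being used in many splits and by producing large impurity reductions when it is used, which matches the "how often" and "net impact" parts of the description.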