Randomized Feature Selection Algorithms

In a random forest, the final feature importance is the average of the feature importances of all the individual decision trees.
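The averaging can be checked directly in scikit-learn, which is assumed here as the library; the synthetic dataset is purely illustrative. The sketch collects the impurity-based importances of every fitted tree and compares their mean with the forest's own `feature_importances_` attribute. In scikit-learn's implementation, each tree's vector already sums to 1, and the forest additionally renormalizes the mean.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Illustrative synthetic data: 8 features, 3 of them informative.
X, y = make_classification(n_samples=500, n_features=8, n_informative=3, random_state=0)
forest = RandomForestClassifier(n_estimators=50, random_state=0).fit(X, y)

# Impurity-based importances of each individual tree (one row per tree).
per_tree = np.array([tree.feature_importances_ for tree in forest.estimators_])

# Averaging over trees reproduces the forest-level importances.
averaged = per_tree.mean(axis=0)

print(np.round(averaged, 3))
print(np.allclose(averaged, forest.feature_importances_))  # True
```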

Univariate feature selection works by selecting the best features based on univariate statistical tests. SelectKBest, for example, removes all but the k highest-scoring features. Randomized feature selection algorithms are often reported to be highly accurate.
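A minimal sketch of univariate selection with scikit-learn's `SelectKBest`, assuming the ANOVA F-test (`f_classif`) as the scoring function and the bundled Iris dataset. Each feature is scored on its own against the target, and only the `k=2` best-scoring columns are kept.

```python
from sklearn.datasets import load_iris
from sklearn.feature_selection import SelectKBest, f_classif

X, y = load_iris(return_X_y=True)

# Score every feature independently with the ANOVA F-test and keep the best 2.
selector = SelectKBest(score_func=f_classif, k=2)
X_new = selector.fit_transform(X, y)

print(selector.scores_.round(1))           # one F-score per original feature
print(selector.get_support(indices=True))  # indices of the retained columns
print(X.shape, "->", X_new.shape)
```

Because each test looks at one feature at a time, this filter is cheap, but it cannot detect features that are only useful in combination with others.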

In the Boruta procedure, a random forest classifier is run on the extended data with the random shadow features included. Feature selection is the problem of identifying a subset of the most relevant features in the context of model construction. Randomized feature selection algorithms of this kind are generic in nature and can be applied with any learning algorithm.
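The shadow-feature step can be sketched as follows, assuming scikit-learn's `RandomForestClassifier` and its impurity-based importances (the original algorithm used permutation importance instead). The helper name `shadow_step` and the toy data are hypothetical. Each shadow column is a shuffled copy of a real column, so it keeps the same distribution but has no relationship with the target; a real feature scores a "hit" when its importance exceeds the best shadow importance.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier


def shadow_step(X, y, rng, n_estimators=100):
    """One Boruta-style iteration (hypothetical helper, simplified)."""
    n_features = X.shape[1]
    # Shadow features: shuffle each column independently, which destroys
    # any relation to y while keeping the column's distribution.
    shadows = np.column_stack([rng.permutation(X[:, j]) for j in range(n_features)])
    X_extended = np.hstack([X, shadows])

    forest = RandomForestClassifier(n_estimators=n_estimators, random_state=0)
    forest.fit(X_extended, y)
    importances = forest.feature_importances_

    real, shadow = importances[:n_features], importances[n_features:]
    # A "hit": the real feature is more important than the best shadow.
    hits = real > shadow.max()
    return X_extended, hits


rng = np.random.default_rng(0)
X = rng.normal(size=(500, 5))
y = (X[:, 0] > 0).astype(int)  # toy target: only feature 0 carries signal
X_ext, hits = shadow_step(X, y, rng)
print(X_ext.shape, hits)
```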

Feature selection has been well studied and plays a vital role in machine learning. Boruta is a feature ranking and selection algorithm based on the random forest algorithm.

Scikit-learn exposes feature selection routines as objects that implement the transform method. In this algorithm, we first evaluate the performance of the model with respect to each feature in the dataset. Feature selection using random forest falls under the category of embedded methods.
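As an illustration of an embedded method exposed through the transform interface, the sketch below wraps a random forest in scikit-learn's `SelectFromModel`. The toy data are hypothetical. By default, `SelectFromModel` keeps the features whose importance is at least the mean importance, and `transform` then drops the remaining columns.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import SelectFromModel

rng = np.random.default_rng(0)
X = rng.normal(size=(400, 6))
y = (X[:, 0] + X[:, 1] > 0).astype(int)  # toy target: features 0 and 1 matter

# The forest's importances are learned during fitting (embedded selection).
selector = SelectFromModel(RandomForestClassifier(n_estimators=100, random_state=0))
selector.fit(X, y)

# transform() keeps only the columns whose importance passes the threshold.
X_reduced = selector.transform(X)

print(selector.get_support(indices=True))
print(X.shape, "->", X_reduced.shape)
```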

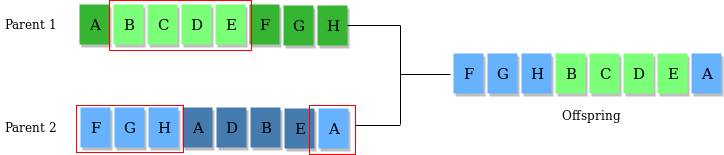

[38] proposed a randomized feature selection (RFS) algorithm. In this paper we present three randomized algorithms for feature selection. Next, rank the features using a feature importance metric; the original Boruta algorithm used permutation importance.
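Permutation importance, the metric mentioned above, can be sketched with scikit-learn's `permutation_importance`. This is an assumption about tooling, not the original implementation; the toy data are hypothetical. Each column of held-out data is shuffled several times, and the average drop in accuracy is recorded; features are then ranked by that drop.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
X = rng.normal(size=(600, 5))
y = (2 * X[:, 0] + X[:, 1] > 0).astype(int)  # toy target: feature 0 strongest, then 1

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
forest = RandomForestClassifier(n_estimators=100, random_state=0).fit(X_train, y_train)

# Shuffle one column at a time on held-out data; record the mean score drop.
result = permutation_importance(forest, X_test, y_test, n_repeats=10, random_state=0)

# Rank features from most to least important.
ranking = np.argsort(result.importances_mean)[::-1]
for j in ranking:
    print(f"feature {j}: {result.importances_mean[j]:.3f} +/- {result.importances_std[j]:.3f}")
```

Measuring on held-out data avoids rewarding features the forest merely memorized during training.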

In this paper we present a novel randomized algorithm for feature selection. In 2015, Saha et al. … The advantage of Boruta is that it clearly decides whether a variable is important or not and helps select variables that are statistically significant.
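The statistical decision can be sketched as a binomial test on hit counts. Assume a feature was compared with the best shadow feature in `n_iter` independent iterations and beat it `hits` times. Under an assumed null hit probability of 0.5, an unusually high count confirms the feature, an unusually low count rejects it, and anything in between stays tentative. The function names are hypothetical, and this simplified version omits the multiple-testing correction and the iterative removal of rejected features that full Boruta implementations perform.

```python
import math


def binom_tail_ge(k, n, p=0.5):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def binom_tail_le(k, n, p=0.5):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(0, k + 1))


def boruta_decision(hits, n_iter, alpha=0.05):
    """Classify one feature from how often it beat the best shadow feature."""
    if binom_tail_ge(hits, n_iter) < alpha:
        return "confirmed"  # beats the shadows more often than chance
    if binom_tail_le(hits, n_iter) < alpha:
        return "rejected"   # beats the shadows less often than chance
    return "tentative"      # not enough evidence either way


if __name__ == "__main__":
    n_iter = 20
    for name, hits in {"f0": 18, "f1": 10, "f2": 2}.items():
        print(name, hits, boruta_decision(hits, n_iter))
```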