Feature Selection Algorithms In Weka

Open the Pima Indians dataset.

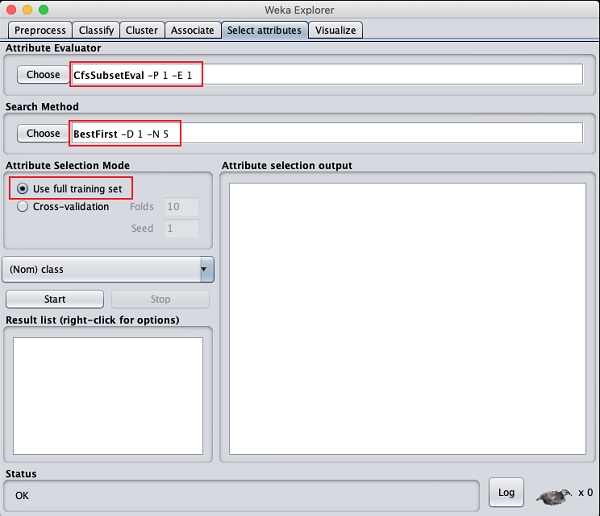

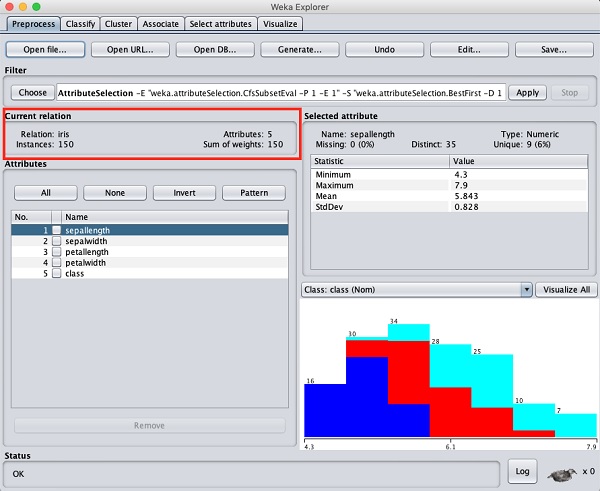

The more algorithms you can try on your problem, the more you will learn about it and the closer you are likely to get to discovering the one or few algorithms that perform best. When you load the data, you will see a screen like the ones shown above. Before building a model, you can run feature selection from the Select attributes tab in the Weka Explorer and see which features are important.
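To make "seeing which features are important" concrete, here is a minimal plain-Java sketch of a single-attribute ranker. It scores each numeric attribute by the absolute Pearson correlation between that attribute and a binary class coded as 0/1, then sorts the attributes best first. This is similar in spirit to pairing Weka's CorrelationAttributeEval with the Ranker search method. It is not Weka's code and does not use the Weka API. The class name, method names, and toy data are hypothetical.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

public class CorrelationRanker {

    // Pearson correlation between two equal-length arrays; returns 0.0 if either has zero variance.
    public static double pearson(double[] x, double[] y) {
        int n = x.length;
        double meanX = 0.0, meanY = 0.0;
        for (int i = 0; i < n; i++) {
            meanX += x[i];
            meanY += y[i];
        }
        meanX /= n;
        meanY /= n;
        double cov = 0.0, varX = 0.0, varY = 0.0;
        for (int i = 0; i < n; i++) {
            double dx = x[i] - meanX;
            double dy = y[i] - meanY;
            cov += dx * dy;
            varX += dx * dx;
            varY += dy * dy;
        }
        if (varX == 0.0 || varY == 0.0) {
            return 0.0;
        }
        return cov / Math.sqrt(varX * varY);
    }

    // Returns attribute (column) indices sorted by |correlation with the class|, best first.
    public static List<Integer> rankByCorrelation(double[][] data, double[] classValues) {
        int numAttributes = data[0].length;
        double[] scores = new double[numAttributes];
        List<Integer> order = new ArrayList<>();
        for (int a = 0; a < numAttributes; a++) {
            double[] column = new double[data.length];
            for (int r = 0; r < data.length; r++) {
                column[r] = data[r][a];
            }
            scores[a] = Math.abs(pearson(column, classValues));
            order.add(a);
        }
        order.sort(Comparator.comparingDouble((Integer idx) -> scores[idx]).reversed());
        return order;
    }

    public static void main(String[] args) {
        // Hypothetical toy data: 6 instances x 3 numeric attributes, binary class coded 0/1.
        double[][] data = {
            {1, 3, 0.3},
            {2, 1, 0.1},
            {3, 4, 0.4},
            {4, 1, 0.1},
            {5, 5, 0.5},
            {6, 2, 0.9},
        };
        double[] classValues = {0, 0, 0, 1, 1, 1};
        System.out.println(rankByCorrelation(data, classValues)); // prints [0, 2, 1]
    }
}
```

A ranker like this scores each attribute on its own, so it is cheap but cannot detect attributes that are only useful in combination.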

Fortunately, Weka provides an automated tool for feature selection. The general feature selection approach can be used in Weka from the Select attributes panel, and the GeneticSearch method is available there in older versions of Weka such as 3.6.14. A big benefit of using the Weka platform is the large number of supported machine learning algorithms.

In the Preprocess tab of the Weka Explorer, select the labor.arff file to load it into the system. This tutorial shows how to use the Weka Explorer to select features from your feature vector for a classification task using the wrapper method.
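The wrapper method scores candidate attribute subsets by training and testing the learning algorithm itself on each subset, rather than scoring attributes independently. In Weka this corresponds to WrapperSubsetEval combined with a search method such as GreedyStepwise or BestFirst. The plain-Java sketch below is not Weka's implementation. It rests on three assumptions:

- the wrapped learner is a nearest-centroid classifier;
- the subset score is leave-one-out accuracy;
- the search is greedy forward selection.

The class name, method names, and toy data are all hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

public class WrapperForwardSelection {

    // Leave-one-out accuracy of a nearest-centroid classifier restricted to `subset`.
    // The classifier is the "wrapped" learner: subsets are scored by how well it performs.
    public static double looAccuracy(double[][] data, int[] labels, int numClasses, List<Integer> subset) {
        int numAttributes = data[0].length;
        int correct = 0;
        for (int held = 0; held < data.length; held++) {
            // Fit: per-class sums over the training fold (all rows except `held`).
            double[][] sums = new double[numClasses][numAttributes];
            int[] counts = new int[numClasses];
            for (int i = 0; i < data.length; i++) {
                if (i == held) {
                    continue;
                }
                counts[labels[i]]++;
                for (int a : subset) {
                    sums[labels[i]][a] += data[i][a];
                }
            }
            // Predict: the class whose centroid is closest (squared Euclidean distance).
            int predicted = -1;
            double bestDist = Double.POSITIVE_INFINITY;
            for (int c = 0; c < numClasses; c++) {
                if (counts[c] == 0) {
                    continue;
                }
                double dist = 0.0;
                for (int a : subset) {
                    double diff = data[held][a] - sums[c][a] / counts[c];
                    dist += diff * diff;
                }
                if (dist < bestDist) {
                    bestDist = dist;
                    predicted = c;
                }
            }
            if (predicted == labels[held]) {
                correct++;
            }
        }
        return (double) correct / data.length;
    }

    // Greedy forward search: start from the empty subset and repeatedly add the single
    // attribute that most improves the wrapped accuracy; stop when no addition helps.
    public static List<Integer> forwardSelect(double[][] data, int[] labels, int numClasses) {
        List<Integer> selected = new ArrayList<>();
        double bestScore = looAccuracy(data, labels, numClasses, selected);
        while (true) {
            int bestAttr = -1;
            for (int a = 0; a < data[0].length; a++) {
                if (selected.contains(a)) {
                    continue;
                }
                List<Integer> candidate = new ArrayList<>(selected);
                candidate.add(a);
                double score = looAccuracy(data, labels, numClasses, candidate);
                if (score > bestScore) {
                    bestScore = score;
                    bestAttr = a;
                }
            }
            if (bestAttr < 0) {
                return selected;
            }
            selected.add(bestAttr);
        }
    }

    public static void main(String[] args) {
        // Hypothetical toy data: attribute 0 separates the classes, attribute 1 is noise,
        // attribute 2 is constant.
        double[][] data = {
            {1, 5, 4}, {2, 1, 4}, {3, 9, 4},
            {7, 5, 4}, {8, 1, 4}, {9, 9, 4},
        };
        int[] labels = {0, 0, 0, 1, 1, 1};
        System.out.println(forwardSelect(data, labels, 2)); // prints [0]
    }
}
```

Because every candidate subset requires re-running the learner, wrapper selection is much more expensive than ranking attributes one at a time. In exchange, it judges attributes by how much they actually help the chosen classifier.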

Many feature selection techniques are supported in Weka. A good place to get started exploring feature selection in Weka is the Weka Explorer.

You can also test classifiers such as SVM (LibSVM or SMO), neural networks (MultilayerPerceptron), and random forests (RandomForest). These often give strong classification results in general, although the best choice is problem dependent. This chapter demonstrates feature selection on a database containing a large number of attributes. Click the Explorer button to launch the Explorer.

Finally, the Weka implementation uses a ridge regularization technique to reduce the complexity of the learned model. This is enabled by default and can be disabled. Removing unwanted attributes from the dataset is likewise an important task in developing a good machine learning model.
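This section does not say exactly which Weka learner or formulation is meant. As a general illustration only, not Weka's exact objective, ridge regularization for a linear regression model with weight vector $\mathbf{w}$ adds a penalty on the squared weights to the squared-error loss:

$$
\hat{\mathbf{w}} = \arg\min_{\mathbf{w}} \; \sum_{i=1}^{n} \left( y_i - \mathbf{w}^\top \mathbf{x}_i \right)^2 + \lambda \lVert \mathbf{w} \rVert_2^2
$$

A larger $\lambda$ shrinks the weights toward zero, giving a simpler model, while $\lambda = 0$ recovers ordinary least squares. For a classifier such as logistic regression, the squared-error term is replaced by the negative log-likelihood, and the penalty term stays the same.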