Feature Selection Algorithm Example

In this post you will learn about one feature selection technique, sequential forward selection, with a Python code example.

Univariate feature selection uses statistical tests to pick the features that have the strongest relationship with the target variable. Lasso can also be used to select variables; this is an embedded method, because the selection happens while the model is being trained.
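As a minimal sketch of selection by statistical testing, the snippet below uses scikit-learn's `SelectKBest` with the ANOVA F-test (`f_classif`). The iris dataset and `k=2` are assumptions chosen only for illustration; they do not come from the post.

```python
from sklearn.datasets import load_iris
from sklearn.feature_selection import SelectKBest, f_classif

# Illustrative data: 150 samples, 4 numeric features, 3 classes.
X, y = load_iris(return_X_y=True)

# Score each feature against the target with an ANOVA F-test
# and keep the k highest-scoring features (k=2 is an arbitrary choice).
selector = SelectKBest(score_func=f_classif, k=2)
X_selected = selector.fit_transform(X, y)

selected_mask = selector.get_support()  # boolean mask over the original columns
print("F-scores:", selector.scores_)
print("Selected columns:", selected_mask)
```

For a regression target, `f_regression` would be the analogous scoring function.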

Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Sequential forward selection belongs to the family of sequential feature selection algorithms. Relief algorithms have mostly been viewed as feature subset selection methods that are applied in a preprocessing step before the model is learned, and they are among the most successful preprocessing algorithms.
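Sequential forward selection can be tried with scikit-learn's `SequentialFeatureSelector`. This is a sketch under assumptions: the k-nearest-neighbors estimator, the iris data, and the target of two features are illustrative choices, not settings from the post.

```python
from sklearn.datasets import load_iris
from sklearn.feature_selection import SequentialFeatureSelector
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)

# The wrapped estimator is refit on each candidate subset and
# scored with 5-fold cross-validation.
knn = KNeighborsClassifier(n_neighbors=3)
sfs = SequentialFeatureSelector(
    knn,
    n_features_to_select=2,  # stop once two features have been added
    direction="forward",     # start empty and add one feature per step
    cv=5,
)
sfs.fit(X, y)

sfs_mask = sfs.get_support()  # boolean mask of the chosen columns
X_sfs = sfs.transform(X)      # data reduced to the chosen columns
print("Selected columns:", sfs_mask)
```

Setting `direction="backward"` gives sequential backward selection with the same interface.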

Feature importance in a random forest is determined by observing how often a given feature is used and its net impact on discrimination, as measured by Gini impurity or entropy. Consider running the example a few times and comparing the average outcome. Forward selection is an iterative method that starts with no features in the model and, in each iteration, adds the feature that improves the model most, until adding another feature no longer helps.
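A short sketch of reading impurity-based importances from a random forest follows. The dataset, the number of trees, and the fixed `random_state` are assumptions made for the example.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

data = load_iris()

# criterion="gini" measures split quality by Gini impurity;
# criterion="entropy" is the alternative mentioned above.
forest = RandomForestClassifier(n_estimators=100, criterion="gini", random_state=0)
forest.fit(data.data, data.target)

# One non-negative score per feature; the scores sum to 1.
importances = forest.feature_importances_
ranked = sorted(zip(data.feature_names, importances), key=lambda p: p[1], reverse=True)
for name, score in ranked:
    print(f"{name}: {score:.3f}")
```

Dropping `random_state` and refitting a few times shows how much the ranking moves between runs.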

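The converse of RFE goes by a variety of names, one of which is forward feature selection: instead of pruning features from a full model, it adds them one at a time. When the search is scored by RMSE, subset selection is driven to minimize the internal RMSE, with R-squared reported alongside it. Some models do not need a separate selection step: lasso and random forests (RF), for example, have their own built-in feature selection.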
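To make the mechanics concrete, here is a hand-written greedy forward selection loop that minimizes cross-validated RMSE. It is only a sketch: `forward_select` is a hypothetical helper, and the linear model, the diabetes dataset, and the cap of four features are assumptions.

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import cross_val_score


def forward_select(X, y, max_features, cv=5):
    """Greedy forward selection; returns (column indices in order added, best RMSE)."""
    selected = []
    remaining = list(range(X.shape[1]))
    best_rmse = np.inf
    while remaining and len(selected) < max_features:
        # Try adding each remaining feature to the current subset.
        rmse_by_feature = {}
        for j in remaining:
            scores = cross_val_score(
                LinearRegression(), X[:, selected + [j]], y,
                cv=cv, scoring="neg_root_mean_squared_error",
            )
            rmse_by_feature[j] = -scores.mean()
        best_j = min(rmse_by_feature, key=rmse_by_feature.get)
        # Stop when the best candidate no longer lowers the RMSE.
        if rmse_by_feature[best_j] >= best_rmse:
            break
        best_rmse = rmse_by_feature[best_j]
        selected.append(best_j)
        remaining.remove(best_j)
    return selected, best_rmse


X, y = load_diabetes(return_X_y=True)
chosen, rmse = forward_select(X, y, max_features=4)
print("Columns in order of selection:", chosen)
print("Cross-validated RMSE:", round(rmse, 2))
```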

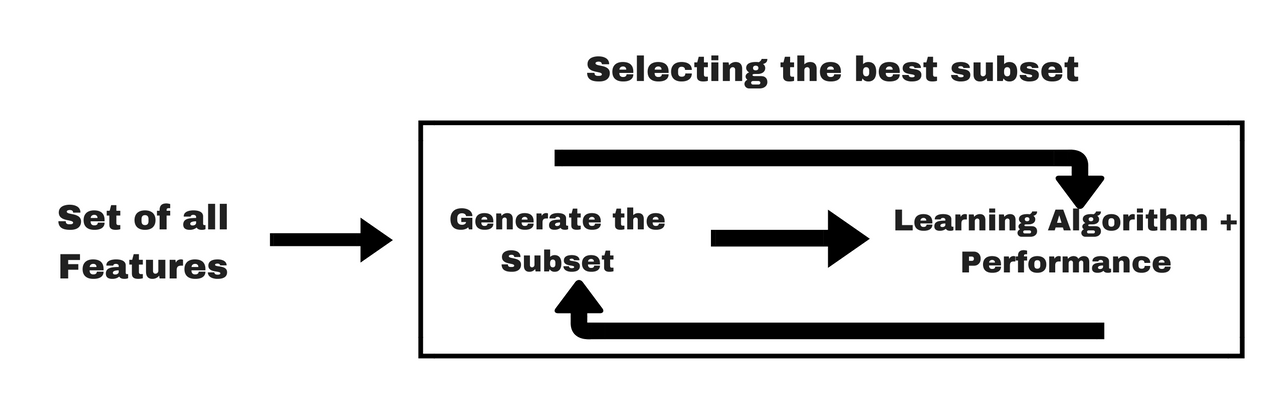

Recursive feature elimination, or RFE for short, is a popular feature selection algorithm. Common examples of wrapper methods include forward feature selection, backward feature elimination, and recursive feature elimination. There are two important configuration options when using RFE: the number of features to select and the algorithm used to help choose them.
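In scikit-learn's `RFE`, the wrapped estimator and the number of features to keep are set through the `estimator` and `n_features_to_select` arguments. The sketch below assumes a decision tree as the estimator and a synthetic dataset; both are illustrative choices.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFE
from sklearn.tree import DecisionTreeClassifier

# Synthetic data: 10 features, of which 5 are informative and 2 are redundant.
X, y = make_classification(
    n_samples=300, n_features=10, n_informative=5, n_redundant=2, random_state=1
)

# RFE fits the estimator, drops the least important feature,
# and repeats until n_features_to_select features remain.
rfe = RFE(estimator=DecisionTreeClassifier(random_state=1), n_features_to_select=5)
rfe.fit(X, y)

rfe_mask = rfe.support_     # True for the features that were kept
rfe_ranking = rfe.ranking_  # 1 for kept features, larger values were dropped earlier
print("Kept:", rfe_mask)
print("Ranking:", rfe_ranking)
```

The estimator must expose `coef_` or `feature_importances_` after fitting so that RFE can rank the features.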

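The lasso regularizer forces many feature weights to be exactly zero, so the features left with nonzero weights are the selected ones. Output from a genetic algorithm feature selection run: 366 samples, 12 predictors, with the maximum generations and internal performance values reported.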
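The effect of the lasso penalty on feature weights can be inspected directly. This is a sketch under assumptions: the diabetes dataset and `alpha=10.0` are arbitrary illustrative choices, and how many weights end up at zero depends on both.

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.linear_model import Lasso
from sklearn.preprocessing import StandardScaler

X, y = load_diabetes(return_X_y=True)

# Standardize so the L1 penalty treats every feature on the same scale.
X_scaled = StandardScaler().fit_transform(X)

# Larger alpha means a stronger penalty and, typically, more zero weights.
lasso = Lasso(alpha=10.0)
lasso.fit(X_scaled, y)

kept = np.flatnonzero(lasso.coef_)          # features with nonzero weight
dropped = np.flatnonzero(lasso.coef_ == 0)  # features the penalty removed
print("Coefficients:", np.round(lasso.coef_, 2))
print("Kept columns:", kept)
print("Dropped columns:", dropped)
```

Refitting with a few different `alpha` values shows the set of kept columns shrinking as the penalty grows.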