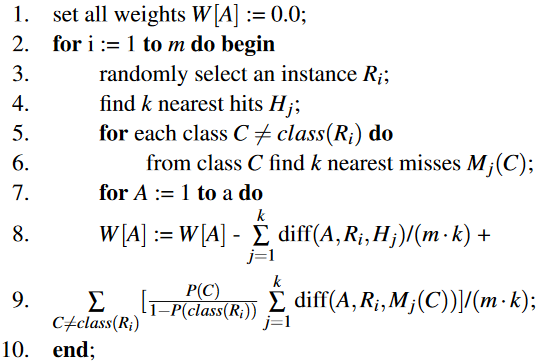

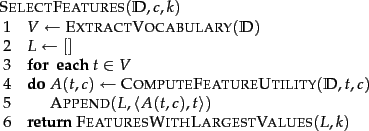

Feature Selection Algorithm Code

`SetFeatureEachRound(50, False)`: sets the number of features examined in each round (here 50), and the boolean sets how those features are selected from the full feature set.
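The two selection strategies that the boolean switches between can be pictured with a small sketch. The helper `candidate_batches` is hypothetical and is not the selector's real implementation. Only the chunk-by-chunk mode is named in this text; treating the other mode as random sampling is an assumption made for illustration.

```python
import random

def candidate_batches(features, per_round, sample):
    """Hypothetical helper: yield the feature batches examined in each round.

    sample=False -> walk through the feature list chunk by chunk.
    sample=True  -> draw a random subset of `per_round` features each round
                    (assumed behaviour, for illustration only).
    """
    if sample:
        rng = random.Random(0)  # fixed seed so the example is reproducible
        n_rounds = -(-len(features) // per_round)  # ceiling division
        for _ in range(n_rounds):
            yield rng.sample(features, min(per_round, len(features)))
    else:
        for start in range(0, len(features), per_round):
            yield features[start:start + per_round]

features = [f"f{i}" for i in range(120)]
chunks = list(candidate_batches(features, 50, sample=False))
print([len(c) for c in chunks])  # [50, 50, 20]
```

Chunking guarantees every feature is visited once per pass, while random sampling may revisit some features and skip others in a given pass.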

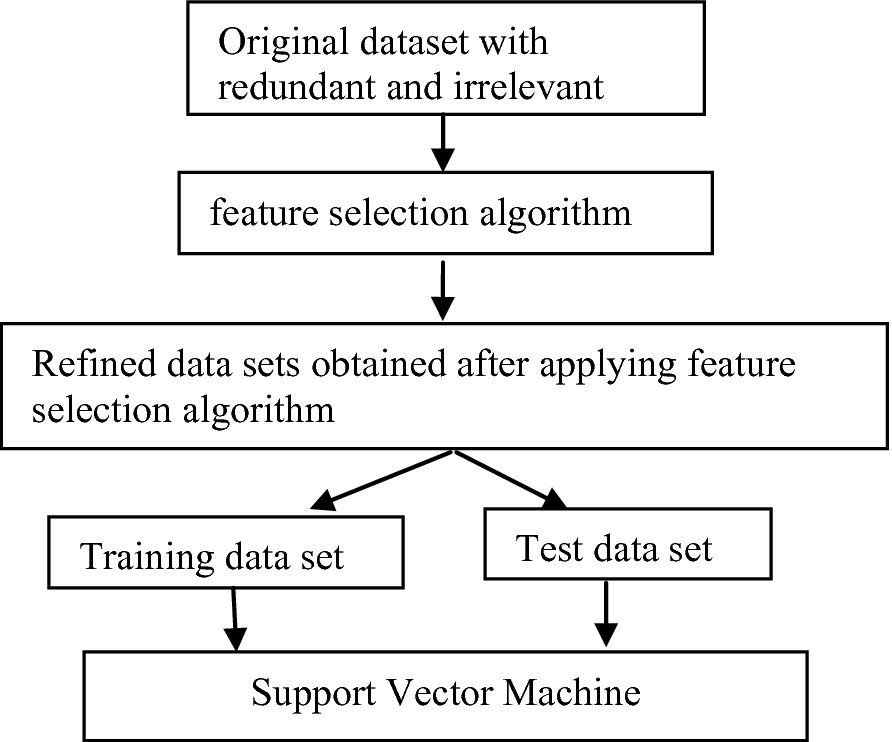

Feature selection is one of the core concepts in machine learning, and it has a large impact on model performance. In a random forest, the final importance of a feature is the average of its importances across all the decision trees. This repository contains code related to natural language processing, written in Python.
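To make the averaging concrete, here is a minimal sketch using scikit-learn (an assumption; the text does not name a library). It averages the per-tree importances by hand and compares the result with the forest's own `feature_importances_` on the bundled iris dataset.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

X, y = load_iris(return_X_y=True)
rf = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)

# Average the impurity-based importances of the individual trees
# (trees that never split carry no information and are skipped).
per_tree = [t.feature_importances_ for t in rf.estimators_ if t.tree_.node_count > 1]
manual = np.mean(per_tree, axis=0)
manual /= manual.sum()  # renormalise so the importances sum to 1

print(np.round(manual, 3))
print(np.allclose(manual, rf.feature_importances_))
```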

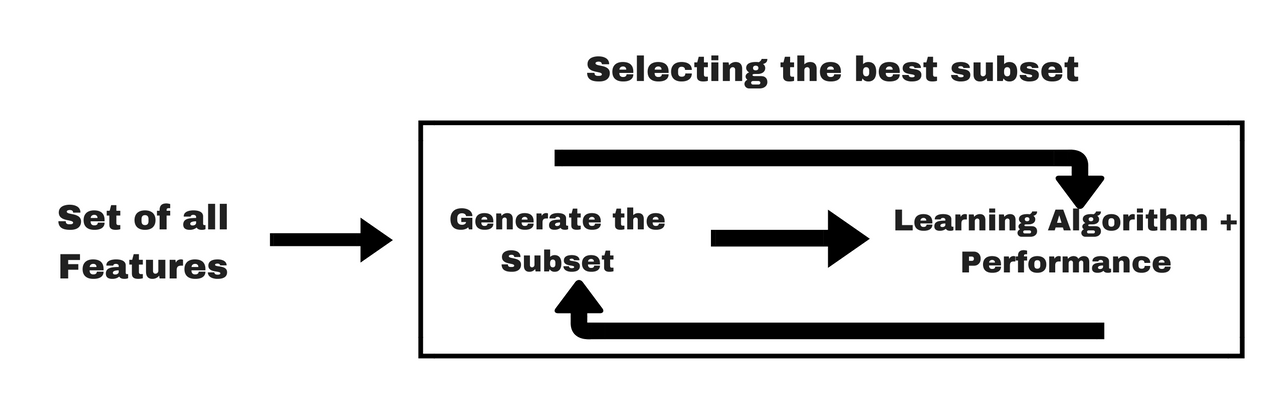

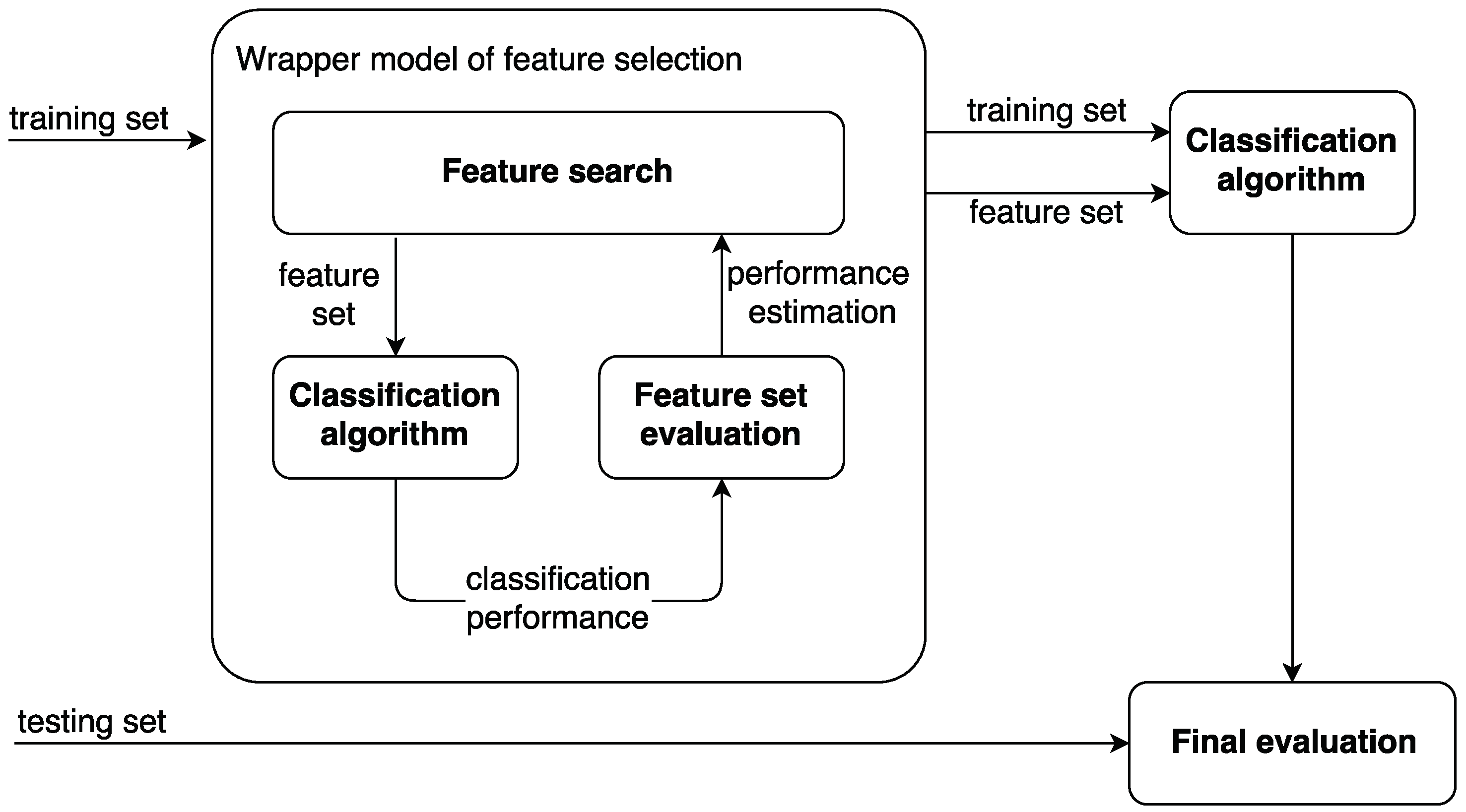

RFE is popular because it is easy to configure and use, and because it is effective at selecting the features (columns) in a training dataset that are most relevant to predicting the target variable. As noted earlier, embedded methods use algorithms that have built-in feature selection.
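As a rough illustration of how little configuration RFE needs, the sketch below uses scikit-learn's `RFE` with a logistic regression on synthetic data. The dataset sizes and the choice of estimator are assumptions for the example only.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFE
from sklearn.linear_model import LogisticRegression

# Synthetic data: 10 features, of which 3 are informative.
X, y = make_classification(n_samples=300, n_features=10, n_informative=3,
                           n_redundant=0, random_state=1)

# Repeatedly fit the model and drop the weakest feature (step=1)
# until only 3 features remain.
rfe = RFE(estimator=LogisticRegression(max_iter=1000), n_features_to_select=3, step=1)
rfe.fit(X, y)

for i, (keep, rank) in enumerate(zip(rfe.support_, rfe.ranking_)):
    print(f"column {i}: selected={keep}, rank={rank}")
```

Selected columns get rank 1; the others are ranked by how late they were eliminated, so rank 2 was the last feature removed.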

`GenerateCol()` generates the candidate features for selection on the selector `sf`. In an embedded method, the learning algorithm uses its own variable-selection process and performs feature selection and classification simultaneously, as the FRMT algorithm does.
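FRMT itself is not shown here. As a stand-in, a common embedded method is L1-regularised logistic regression, where training the classifier and zeroing out unhelpful features happen in the same fit. This is a sketch with scikit-learn; the value `C=0.1` and the synthetic data are arbitrary assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectFromModel
from sklearn.linear_model import LogisticRegression

X, y = make_classification(n_samples=300, n_features=20, n_informative=4,
                           n_redundant=0, random_state=0)

# An L1 penalty drives the coefficients of unhelpful features to exactly zero,
# so fitting the classifier and selecting features happen in one step.
l1_model = LogisticRegression(penalty="l1", solver="liblinear", C=0.1, random_state=0)
selector = SelectFromModel(l1_model).fit(X, y)

kept = selector.get_support(indices=True)
print("kept columns:", kept)
X_reduced = selector.transform(X)
```

Smaller values of `C` mean a stronger penalty and therefore fewer surviving features.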

Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. The selector's classifier (`clf`) is set to `LogisticRegression`; this selected algorithm can be any algorithm. Fewer data points reduce algorithm complexity, so algorithms train faster.
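The idea of a pluggable evaluating algorithm can be sketched with scikit-learn's `SequentialFeatureSelector`. This is a swapped-in tool, not the `sf` selector described above, and the wrapper `forward_select` is a hypothetical helper. It greedily adds features by cross-validated score, and any classifier can be passed in.

```python
from sklearn.datasets import load_iris
from sklearn.feature_selection import SequentialFeatureSelector
from sklearn.linear_model import LogisticRegression
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)

def forward_select(clf, n_features):
    """Hypothetical helper: greedy forward selection that adds, one at a time,
    the feature that most improves the cross-validated score of `clf`."""
    sfs = SequentialFeatureSelector(clf, n_features_to_select=n_features,
                                    direction="forward", cv=5)
    sfs.fit(X, y)
    return sfs.get_support(indices=True)

# The evaluating model is pluggable: any scikit-learn classifier can be passed in.
print(forward_select(LogisticRegression(max_iter=1000), 2))
print(forward_select(KNeighborsClassifier(n_neighbors=5), 2))
```

Different classifiers can pick different subsets, which is why the choice of evaluating algorithm matters.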

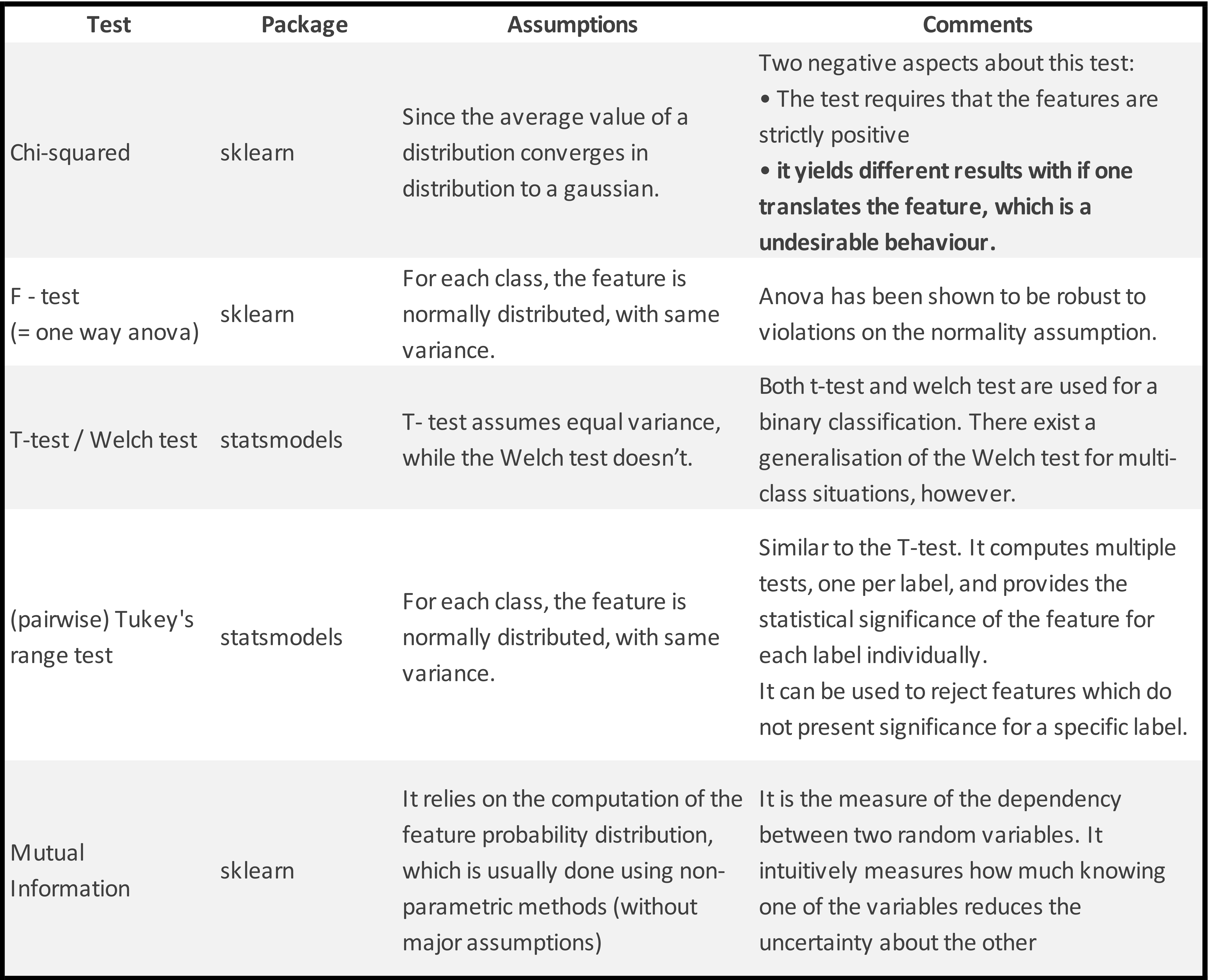

We calculate feature importance from the decrease in node impurity at each split in each decision tree. For help choosing a statistical measure for your data, see the tutorial. We can also use a random forest to select features based on their importance.
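The sketch below, assuming scikit-learn, shows both ideas. First, it computes mean decrease in impurity (MDI) by hand for a single tree: each split credits its feature with the parent's weighted impurity minus the children's. It then checks the result against `feature_importances_`. Second, it keeps only the features whose random-forest importance is at or above the mean. The `threshold="mean"` choice is an assumption.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import SelectFromModel
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)

# 1) Mean decrease in impurity (MDI) for one decision tree, computed by hand.
dt = DecisionTreeClassifier(random_state=0).fit(X, y)
tree = dt.tree_
w, imp = tree.weighted_n_node_samples, tree.impurity
mdi = np.zeros(X.shape[1])
for node in range(tree.node_count):
    left, right = tree.children_left[node], tree.children_right[node]
    if left == -1:  # leaf: no split, no impurity decrease
        continue
    # weighted impurity of the parent minus that of its two children
    mdi[tree.feature[node]] += w[node] * imp[node] - w[left] * imp[left] - w[right] * imp[right]
mdi /= mdi.sum()  # normalise so the importances sum to 1
print(np.allclose(mdi, dt.feature_importances_))

# 2) Keep features whose random-forest importance is at or above the mean importance.
rf = RandomForestClassifier(n_estimators=200, random_state=0)
selector = SelectFromModel(rf, threshold="mean").fit(X, y)
print("selected columns:", selector.get_support(indices=True))
```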

Metaheuristics can also be applied to feature selection. `sf` can also select features chunk by chunk. Recursive feature elimination, or RFE for short, is a popular feature selection algorithm.
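As one example of a metaheuristic, here is a small genetic algorithm that searches over boolean feature masks. Cross-validated accuracy serves as the fitness. This is a minimal sketch, not a method from this repository; the population size, mutation rate, and the use of scikit-learn's logistic regression are all assumptions.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

X, y = make_classification(n_samples=200, n_features=15, n_informative=4,
                           n_redundant=0, random_state=0)
rng = np.random.default_rng(0)

def fitness(mask):
    """Mean 3-fold CV accuracy using only the features switched on in `mask`."""
    if not mask.any():
        return 0.0
    model = LogisticRegression(max_iter=1000)
    return cross_val_score(model, X[:, mask], y, cv=3).mean()

def genetic_select(pop_size=12, generations=8, mutation_rate=0.1):
    n = X.shape[1]
    pop = rng.random((pop_size, n)) < 0.5  # random boolean feature masks
    for _ in range(generations):
        scores = np.array([fitness(m) for m in pop])
        parents = pop[np.argsort(scores)[::-1][: pop_size // 2]]  # keep the best half
        children = []
        while len(children) < pop_size - len(parents):
            a, b = parents[rng.integers(len(parents), size=2)]
            cut = rng.integers(1, n)  # one-point crossover
            child = np.concatenate([a[:cut], b[cut:]])
            flip = rng.random(n) < mutation_rate  # bit-flip mutation
            children.append(child ^ flip)
        pop = np.vstack([parents, np.array(children)])
    scores = np.array([fitness(m) for m in pop])
    return pop[scores.argmax()], scores.max()

best_mask, best_score = genetic_select()
print("selected columns:", np.flatnonzero(best_mask), "CV accuracy:", round(best_score, 3))
```

Keeping the best half unchanged each generation (elitism) means the best score found never gets worse. Crossover and mutation explore new feature subsets.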